Back-to-back Testing

Formal methods to control the compliance of software implementations

Back-to-back testing (B2BT) techniques are used to verify that two products of a software development process are equivalent. By running the two products “back to back” on a set of test cases, it is verified that they produce the same output. If the scenarios are chosen carefully and the two runs produce the same results, it can be inferred that the two products are equivalent with some level of confidence.

Note

This article is the full text of a paper published at the Lambda MU 23 congress

Introduction¶

Typically, these techniques are used to ensure that a model of critical software is a reliable representation of software running on its hardware target. This allows the results of validation techniques obtained from the model, such as reviews, functional tests, or formal proofs, directly to the real object. Thus, if a security property is proven on a model, and there is sufficient confidence in the tests performed in B2BT, then it is possible to deduce with some level of confidence that the property holds on the software running on its hardware target.

The model used may itself be the result of the validation. By diversifying the artefacts, and showing that they are equivalent, it can be inferred that the two diversified processes to obtain these products probably did not introduce any error.

Another possible use of these techniques is to validate a transformation performed on a first product to obtain the second. For example an automatic code generator, a compiler, a linker an optimiser. If it is possible to run (or simulate) the first product and check that it behaves identically to the second by B2BT methods, then it is possible to conclude that the tool used to perform the transformation has probably not introduced any errors.

This article is organised as follows: the first part presents the principle of B2BT and test sequence generation, illustrated around an application. The strategy used to generate the sequences is explained in the second part. The implementation of the tests around the system under test is detailed in the third part. Finally, the IV, V and VI parts present the use cases and two projects for which our B2BT solution was used.

Presentation of Systerel’s B2BT Solution¶

Problem Addressed¶

In critical systems, the standards provide some activities to control defects between the specification of a system and its implementation.

These activities include verification, testing, simulation, proof, validation and qualification of the tools used. One of the industrial problems concerns the difficulty of taking some value for the validation policy from all the work carried out on models or codes that are not yet the software executed on its execution platform.

The B2BT approach proposed by Systerel is in line with the techniques of automatic generation of test by Model Checking 1 2 3 but proposes to bring new elements of conviction to use more massively the work carried out on models or source code by an automatic generation of tests qualifying the conformity of executable software with the semantics of the model / source code.

Specificities of the B2BT Tool¶

The B2BT solution developed by Systerel is based on our existing technologies. We have therefore chosen to build our models in High Level Language (HLL) 4, the language used by our Systerel Smart Solver (S3) solutions 5. A model can be the result of different activities: either specific to B2BT activities, or reuse of validation and verification data, or automatic translation from another language, etc.

Finally, this model is used by the B2BT tool to generate test sequences.

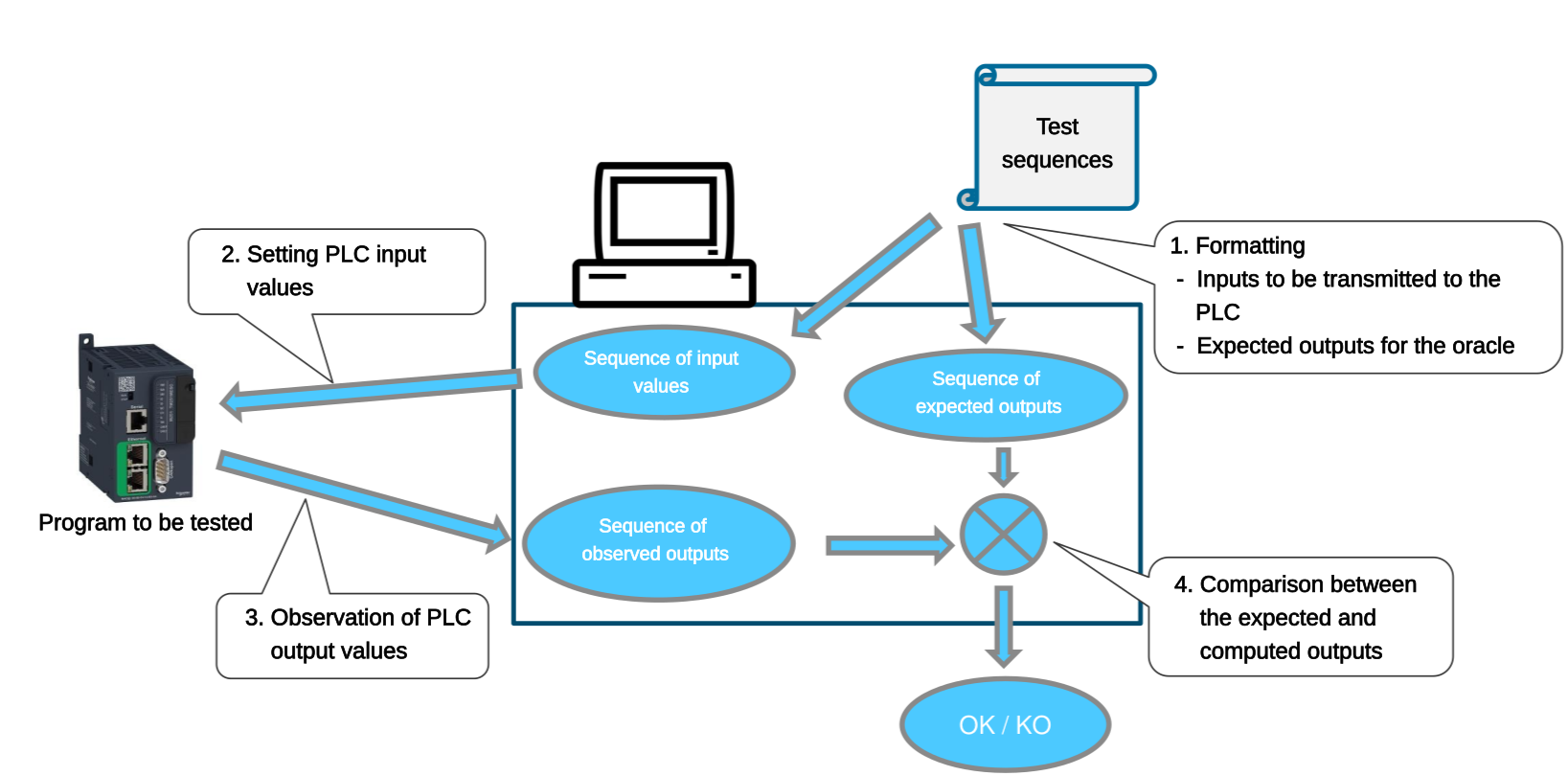

B2BT falls into the category of testing by comparison of observable behaviour and as such, the sequences generated describe for each step of the system the expected outputs according to its inputs and the state of its memories. The choice of the strategy used for the generation of the sequences, as well as the level of confidence given to these sequences, are described in the second part.

Although not the focus of this post, it is important to note that the same HLL model, can be used by our S3-core analysis engine to perform formal proof activities.

Application to a Simple Model¶

In order to illustrate the process of sequence generation, a deliberately simple, but understandable and representative software application is detailed in this section.

Consider that this application has 2 inputs, represented by two Boolean variables \(I1\) and \(I2\), and 2 outputs, represented by two Boolean variables \(O1\) and \(O2\).

The observable behaviour of the outputs as a function of the inputs is described by the following boolean equations.

Let us further consider that we have a testable device, i.e. one whose inputs can be controlled in order to control its outputs, which embeds a software implementation based on the behaviour described in (1) and (2).

Sequence Generation¶

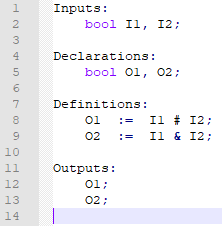

The HLL model corresponding to this system can be constructed. This model is explained in Fig. 1.

Figure 1: HLL model of the application

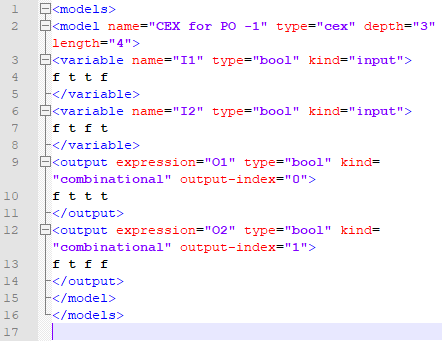

The HLL model is then used to generate the test sequences. At the

end of the generation, a test case of length 4 cycles is

obtained, as shown in Fig. 2 where each line

represents the successive states (f for False, t for True) of each

input variable (\(I1\) and \(I2\)) and each output (\(O1\) and \(O2\)).

Figure 2: Generated test sequence B2BT

Detecting Discrepancies in Behaviour¶

Let us assume that the behaviour described in the HLL model presented in the previous section has been implemented in a system. Consider the following two errors:

Implementation error in the calculation of variable \(O2\): use of an OR instead of an AND,

Error in the capture of the variable \(I1\) at each cycle: \(I1\) blocked at TRUE.

The table below presents the truth table of the system, detailing the expected behaviour and the obtained behaviour, on the test sequence obtained with the tool B2BT.

I1 |

I2 |

Expected |

Error calculation O2 |

I1 blocked to TRUE |

|||

O1 |

O2 |

O1 |

O2 |

O1 |

O2 |

||

FALSE |

FALSE |

FALSE |

FALSE |

FALSE |

FALSE |

TRUE |

FALSE |

TRUE |

TRUE |

TRUE |

TRUE |

TRUE |

TRUE |

TRUE |

TRUE |

TRUE |

FALSE |

TRUE |

FALSE |

TRUE |

TRUE |

TRUE |

FALSE |

FALSE |

TRUE |

TRUE |

FALSE |

TRUE |

TRUE |

TRUE |

TRUE |

It is noted that this single test sequence detects the two errors introduced:

For the calculation error of \(O2\), a difference in the observable behaviour is detected at the 3rd step of the sequence,

For the blocking of the variable \(I1\), a difference in the observable behaviour is detected at the 1st step of the sequence.

More generally, the test generation strategy will allow establishing a detailed mesh of the reference behaviour, from which it is possible to observe the consequences of failures in the development of the model implementation.

B2BT Test Sequence Generation Strategy¶

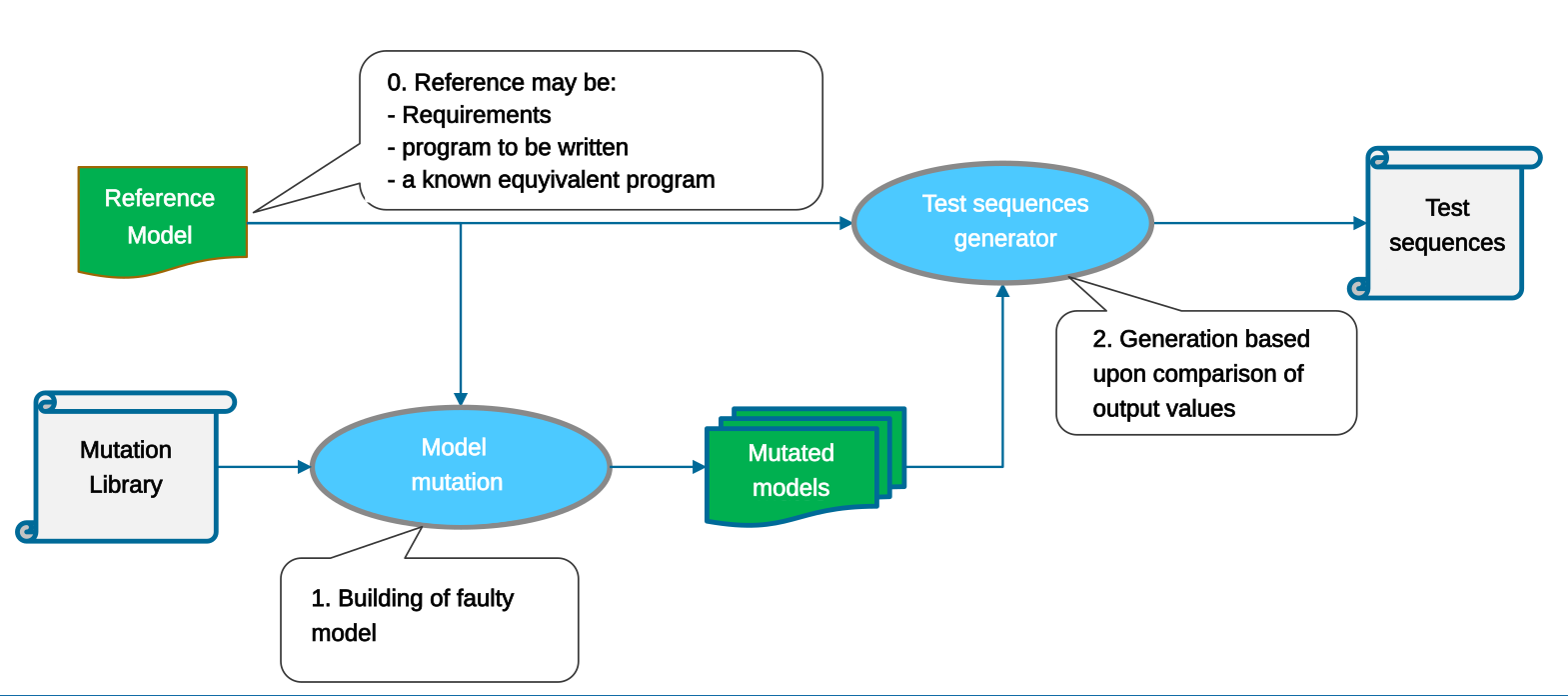

This section describes the strategy that has been chosen for the generation of the B2BT test sequences. The objective of the B2BT tool is to perform mutations on the model and then to determine a set of sequences that would detect a difference between the observable behaviour of the original model and the mutated model.

In order to determine the classes of mutations, we relied on the ability to observe a mutation on the outputs and on the semantics of the HLL of which our S3 technology can provide a finer semantically equivalent view. It is at this finer level that mutations are performed, on inputs, memories, operations. The disadvantage of this approach lies in the overabundance of possible mutations compared to reality. But it is also its strength, because the mesh thus woven is extremely fine.

The B2BT tool therefore relies on these mutation classes to direct the generation of test sequences. Using the S3-core analysis engine, we seek to generate test sequences that detect each of the possible mutations of the model, i.e. sequences of inputs to the system that would produce different outputs depending on whether they are evaluated on the original model or the mutated model.

Although this is not the objective of this strategy, our experiments have shown that it can partially cover the temporal evolution of a signal (see parts V and VI). For such a coverage, the notion of associated coverage and the possibility to define a dedicated strategy should be studied.

Implementation of the Tests¶

Once the test sequences have been generated, it is necessary to implement them on the target system. This implementation can be more or less complex depending on the characteristics of the system to be tested and the test environment available.

Indeed, the B2BT test sequences provide, for each cycle of the system, a set of input values and the expected output values. It is therefore necessary to synchronise the test tool and the system under test, to ensure that:

the system under test has time to acquire the inputs and calculate the outputs,

the test fixture checks for consistent expected and calculated output values (which correspond to the same cycle in the test sequence).

The following subsections present the four implementation cases we consider.

Tests on Host Machine / Simulator¶

The host/simulator test allows a test facility to be set up with the finest checking facilities:

The synchronisation of the execution of the system under test,

Maximum observation (internal variables become accessible).

This maximum flexibility ensures the correct timing of the inputs as well as the fine synchronisation of the outputs.

“White Box” Testing¶

A “white box” test is when the test tool and the machine to be tested meet the following conditions:

The test device interfaces with the target machine,

The test facility has read and write access to the internal variables of the system under test,

The test facility can control the start of a cycle on the target machine.

It is then possible to control the various operating cycles of the system under test or to force its variables.

“Grey Box” Tests¶

A “grey box” test is when the test tool can only read some or all of the variables in the system under test. In this configuration, it is always possible to synchronise the test equipment with the internal variables of the system under test. The system must of course have such variables (execution cycle counter, acquisition/transmission status, etc.).

“Black Box” Testing¶

“Black box” testing is when the test tool has no access to the internal variables of the system under test. Tests are particularly complex to implement in this type of configuration. It is possible to try to synchronise the test fixture and the system by setting their cycle times to close (ideally equal) values and starting them synchronously.

However, this type of configuration is much more sensitive to runtime discrepancies.

Controlling the execution of tests on a “black box” configuration may require a particular test generation strategy to make the scenario compatible with the possible shift of one cycle of the sample. Such a strategy is usually more expensive.

An alternative is to build an overlay to the system under test that embeds the test vectors and expected outputs in the system under test if its resources offer the capacity. This is a “white box” configuration, but it requires modifications to the software to make it compatible with the encapsulation.

Use Cases¶

There are multiple use cases for this technology on the critical software domain.

As it involves testing by comparing the actual output of an object under test with the expected output derived from a model, let us examine the possible use cases according to the origin of the model.

Compiler Optimisation¶

Let us first consider that the model is a semantically equivalent image of the source code. The differences that are observable at test execution may arise from the platform on which this code is executed (set of hardware resources, base software, operating systems) or from the compiler used to transform it into executable software. The defects encountered can then be reproduced and analysed to identify the root cause. In the area of critical software development, (e.g. IEC 61508-3, IEC 62138), the standards require that the tools that contribute to the production of the executable software be evaluated with respect to the level of confidence about the absence of failure in the software. Compilers belong to this class of tools. This application of the B2BT can be seen as a solution for checking the output of the compiler at runtime on the target, capturing failures that impact on the output of the executable software. This analogy also applies to the runtime platform, but also to translation tools between higher-level semantics and a compilable language.

Based on this safeguard, it is now possible to consider B2BT as a means of mastering off the shelf tools. One application could be to allow oneself to exploit more widely the power of compilers, in particular the optimisation options when they are accompanied by restrictions such as “the activation of optimisation lifts the guarantee on the order of evaluation of the parameters of a function” when the B2BT tests are successfully carried out.

Continuity of Formal Verification Activities¶

Let us then consider the case where the model used to generate the B2BT tests is not derived from the code but is developed through formal property checking activities. The interest of formal property checking is to demonstrate mathematically that RAMS requirements hold on model, whatever the sequence of inputs considered and at whatever temporal depth in the life of the system. In this case, the contribution of B2BT is to be able to demonstrate that the machine under test has no observable mutations with respect to the execution semantics of the model that supported the proof activities. When the proof obligations of the formal model are shown as proven, this proof holds on the execution semantics of the model and by transitivity on the machine under test since it does not contain any observable deviation.

In this case, B2BT complements traditional validation strategies by allowing the demonstration of compliance with RAMS requirements by proof on a formal model associated with the demonstration of compliance with the semantics underlying the proof on the machine under test by the correct execution of B2BT sequences.

Such an approach makes it possible to consider hybrid validation approaches where the proof takes over the demonstration of compliance with the RAMS requirements. A proof approach provides complete coverage with respect to RAMS in the sense that the proof provides a demonstration that whatever the sequence of events on the inputs to a model, a proof obligation holds.

In comparison, an approach using functional tests is based on scenarii. Except for a few rare situations where completeness of combinations is possible, an approach by test only provides coverage by sampling.

The strategy is therefore to develop a validation policy consisting of a proof against RAMS requirements with automatically generated B2BT tests and some functional tests against non-safety critical requirements (usability, performance, liveliness, etc.). The proof provides full coverage of the RAMS requirements. The B2BT consolidates that the RAMS requirements are based on the execution of the semantics by fine sampling the respect of the execution semantics.

It is notable that the development of a proof is less costly in terms of workload than the development of a test in terms of engineering load. Indeed, writing a proof requirement consists in formalising the “WHAT” of a requirement.

Example

Let’s take the example of a requirement where the system shall react by deactivating an output \(S\) when a quantity \(G\) exceeds a critical threshold \(Sc\) in the presence of certain conditions \(C\). With a model manipulating \(S\), \(Sc\) and \(C\), the formal writing of the proof requirement is immediate, and the proof tools are in charge of finding the proof (the possible additional human burden being devoted to guiding the proof path by intermediate lemmas when the prover fails to find the proof alone). In the case of a test approach, it is necessary, through test engineering and a thorough functional knowledge, to create the preconditions for the test to bring the system into a state where the test will make sense. In the proposed toy case, it is a matter of describing for the validation environment each step of the scenario that will bring the system into the situation where \(G\) will exceed \(Sc\) while respecting \(C\).

This simple example shows that the proof offers full coverage (whatever the value sequences on the inputs, then the RAMS property is respected) where the test only samples (in the scenario approach, we test only one trace of the system against a huge, if not infinite, amount). It also demonstrates that the engineering effort associated with proof is more efficient and more robust to changes than the engineering effort associated with testing (proof does not search for scenarios, but establishes an invariant under all circumstances and the search is delegated to the machine).

Treatment of Obsolescence¶

Finally, consider the case where the model is derived from a software version of an obsolete equipment.

It is common in the industry that an equipment qualified in the more or less recent past can no longer be maintained, due to a lack of available spare parts or even a lack of a test environment that is still operational (test servers running obsolete OSs that security policies ban or will rightly ban from the networks because of their vulnerabilities). As part of the obsolescence treatment of such equipment, it is possible to generate test sequences for compliance with the execution semantics of the obsolete code using the B2BT approach.

These tests offer a finer coverage than validation tests: the successful completion of these tests demonstrates that the new generation of equipment has no semantic alteration in relation to the execution semantics of the qualified but obsolete version.

In the event that the owner does not have or no longer has the validation test facilities used at the time, the B2BT tests provide an automatic solution for rebuilding a new generation qualification repository. This approach must be weighed against obsolescence management with a set of functional evolutions. In this case, it will be necessary to proceed in two steps: qualifying the obsolescence treatment with the help of B2BT and then adding a set of tests or proofs dedicated to the functional evolutions.

This obsolescence treatment approach can be generalised more globally to the control of software being ported to new execution platforms.

Validation of a Critical System¶

The most ambitious prospective usage will be to use the B2BT technology for a critical system, coupled with a model produced by a validation team. Indeed, in this configuration, the validation team imposes through its model, the execution semantics of the object in accordance with the client’s needs without descending into the complexity of the production of the executable software or the security execution platform. This last use case proposes a strategy for the integration of commercial off the shelf components within critical control systems.

In fact, by ensuring that the execution semantics proposed by a commercial safety computer and its programming environment remain unchanged from those of a model of the requirement, the B2BT provides elements for an answer to the suitability for use of a safety computer with its programming environment provided as a black box with safe operating conditions.

Industrial Application: Partnership with EDF¶

Implementation on EDF Models¶

In partnership with EDF, Systerel has used the B2BT tool on a Lustre model provided by EDF. This model has been developed to match typical applications used in certain command and control systems of a nuclear unit. The objective is thus to evaluate the applicability of the B2BT method to nuclear applications. Several successive steps have been implemented to allow the use of this model to perform the generation of the B2BT test sequences.

Systerel and EDF have worked on the definition of high-level coverage criteria. Three high-level coverage criteria were retained to verify that the tests generated by the B2BT solution cover the particularly sensitive and critical behaviours of the application. Although these criteria are not detailed for reasons of confidentiality, it should be noted that they were specifically defined for this project. The walk through of the test sequences, with respect to these high-level criteria, will then increase the level of confidence in the coverage of the tests by B2BT: not only are all mutations tested, but all critical paths have been walked through.

These steps are briefly described below:

Pre-processing of input files The input files, in Lustre V6 format for the purposes of the study, require translation into the HLL language,

-

Analysis of the correct definition of the model

Before launching the generation of the test sequences, a verification pass of the good design of the model is carried out using the S3 analysis engine. This check mainly consists of ensuring that the initial model is sound by confirming the absence of, for example, access to variables outside the defined bounds or division by zero.

-

Generation of test sequences

Once the model has been translated and checked, it can be passed to the test sequence generation tool B2BT which will apply the mutations and produce test sequences to detect any defects.

-

Measurement of the coverage rate according to the defined criteria

The generated test sequence is applied to the application model in order to measure the value of the coverage rate achieved for each of the defined coverage criteria.

-

Execution of the test sequence

This last step consists of playing the test sequence on the target equipment. It should be noted that if step 1 introduces faults in the semantics, then these will be detected during the execution of the test, except in the case of combined faults.

Due to the existence of long timeouts (several hundred cycles) in the application studied and the explosion of the state space inherent in the use of a proof engine at large depths, the model has been equipped to allow the control of memories and timeouts.

In addition to these two types of B2B test generation, we added some test sequence generation that focuses on higher-level test objectives.

Results¶

B2BT Generation of the Standard Model¶

The B2BT analysis of the standard model was conducted in two complementary phases. The first one, lasting 12 hours, consists in defining random test steps and allows obtaining the major part of the coverage. The second, lasting 3 days, uses the S3 analysis engine to find all the test objectives not yet covered.

At the end of the generation, we obtained 77 scenarios totalling 21606 time steps and covering 5928 B2BT test objectives out of the 6719 that the system has. Taking into account the 437 objectives supposed to be unreachable (see next section), we obtain a coverage rate of 94.6% for the detection of mutated operators.

Concerning the high-level coverage criteria, 561 objectives out of the 566 in the application model were covered. This means a coverage rate of 99.1 %. It is noteworthy that the sequences generated according to the B2BT coverage criterion, which is fundamentally different from the high-level ones, also ensure a very satisfactory coverage rate on these high-level criteria.

B2BT Generation of the Tooled Model¶

The B2BT analysis of the tooled model (controllable memory and timing) takes about 8 minutes and produces 10 scenarios totalling 87 time steps and covering 5782 B2BT coverage objectives out of the 6219 in the system (93%). Moreover, an exploration to a depth of 50 cycles did not allow covering the 437 uncovered objectives, which suggests that they are, in whole or in part, unattainable.

The analysis of the high-level coverage criteria on the scenarios obtained indicates that 544 of the 566 objectives were covered. This represents a coverage rate of 96.1%. This difference compared to the standard B2BT model is due to the fact that some of the high-level criteria are sequential. Indeed, the fact that the memories and timings are controllable reduces very significantly the number of successive test steps required to reach an objective and therefore the chances of producing the expected sequences for these criteria.

This tooling therefore drastically reduces the calculation time and the number of test steps while significantly improving test coverage. However, this solution requires the ability to control these memories and timings during the execution of the test sequence.

Generation of Sequences According to High-level Coverage Criteria Alone¶

The three high-level coverage criteria, applied to the application under study, result in a set of 566 test objectives. The S3 analysis engine finds 86 scenarios totalling 3230 time steps in 1.5 minutes, covering 563 of the 566 test objectives. It was also possible to prove that the 3 test objectives not covered were not achievable.

Although this solution offers the best result in terms of generation time, test sequence length and coverage of high-level criteria, it only tests the critical paths and not each of the operators. It therefore does not guarantee the same level of completeness of the test performed and therefore does not detect implementations whose non-critical observable behaviour may have been altered during coding or compilation.

Conclusion¶

These different experiments have shown that this automatic test sequence generation method can efficiently address industrial system validation problems and take into account material constraints (standard or tooling test bench) and complexity constraints (generation by operator mutation or by high-level criteria only) for the execution of test sequences.

Industrial Application: Interlocking Machine on a PLC¶

Within the framework of a project for the design of tramway interlocking machine, we have sought to implement a tram interlocking system on a PLCl which have the particularity of being SIL4 certified according to EN 50126, EN-50128 and EN 50129 for safety applications.

The system — a complete tram line — is divided into independent stations or zones. For this reason, a decentralised architecture with one automaton per zone was selected. Finally, in order to facilitate the evaluation activities of these decentralised subsystems, the following development strategy was considered:

Development of a generic library of tramway signalling principles in the form of Sequential Function Chart (SFC), a graphical programming language for Programmable Logic Controllers (PLC) defined in the |IEC61131-3| standard,

Development of specific applications for each station or zone using Function Block Diagram (FBD), another graphical language for PLCs defined in the IEC61131-3 standard.

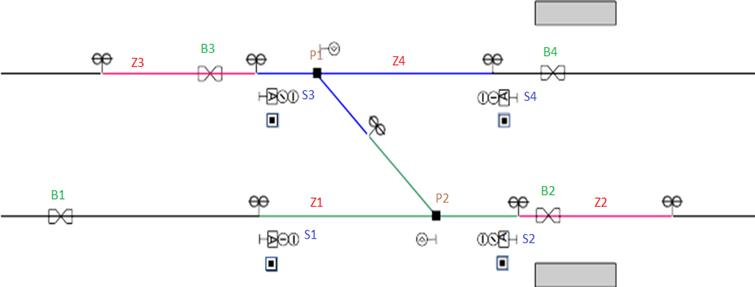

In order to test these specific applications, we chose to use the techniques of B2BT. The first tests focused on the development of the specific application for a signal zone. This zone, composed of 4 signals and 2 switches and crossings, allows the creation of 4 routes (2 of which are for lane changes). The plan of this zone is described in Fig. 3.

Figure 3: Track plan

Based on the principles of signalling in the form of SFC, the expected behaviour of this area has been implemented in the PLC.

In parallel, a HLL model of this application has been realized, in order to:

Prove the holding of the safety properties on the model,

Generate the B2BT test sequences from the model,

Execute these test sequences on the embedded application.

During the execution of the test sequences, an implementation error was quickly detected. The sequencing of the execution of the instantiated principles was not in the same order on the target and on the model. This difference modified the observable behaviour of the system, since the order in the execution priorities of the principles was no longer respected and was indeed detected during the execution of the tests.

This experimentation thus made it possible to discover a bad control of the development environment of the PLC.

Conclusions and Perspectives¶

The results we have obtained from the implementation of our B2BT solution are particularly satisfactory. They have allowed us to:

Consolidate the confidence we have in our test sequence generation strategy, whose coverage rates are very high, including when we seek to verify implementation-specific criteria,

Detect errors in implementations where the observable behaviour of the system under test was different from the expected behaviour,

Transfer the results of formal proofs obtained from the models to the software implementations of the systems.

There are still limitations in the use cases of our solution. In particular, it would be interesting to enrich the test sequence generation strategy to compensate for the lack of coverage on models containing time delays. Concerning the implementation, the testing of “grey” and “black” box systems remains complex.

There are promising avenues with these limitations:

Defining specialised generation strategies around time delays; note that this axis will be interesting to build in conjunction with the ability to abstract time at the level of execution platforms to also gain on test execution time,

Work on the means of use in relation to the fine control of the execution of the sequences or propose alternatives according to the capacities of the machines under test,

Work on the translation of the systems to isolate more precisely the memory context which makes it possible to make the approach more massively combinatorial for the machines which allow to control the memory from one cycle to another.

References

- 1

-

Simon H., Friedrich N., Biallas S., Hauck-Stattelmann S., Schlich B. & Kowalewski S, “Automatic test case generation for PLC programs using coverage metrics”, 2015 IEEE 20th Conference on Emerging Technologies & Factory Automation (ETFA) (2015), p.1-4

- 2

-

Enoiu E. P., Čaušević A., Ostrand T. J., Weyuker E. J., Sundmark D. & Pettersson P, “Automated test generation using model checking: an industrial evaluation”, International Journal on Software Tools for Technology Transfer 18(3) (2016), p.335-353

- 3

-

Enoiu E. P., Sundmark D. & Pettersson P., “Model-based test suite generation for function block diagrams using the uppaal model checker”, 2013 IEEE Sixth International Conference on Software Testing, Verification and Validation Workshops (2013), p.158-167

- 4

-

Nicolas Breton. High Level Language - Syntax and Semantics - Logical Foundation Document. [Technical Report] C672 pr4.0 rc1 A, Systerel. 2021. ⟨hal-03356342⟩

- 5

-

Breton, N., Fonteneau, Y. (2016). S3: Proving the Safety of Critical Systems. In: Lecomte, T., Pinger, R., Romanovsky, A. (eds) Reliability, Safety, and Security of Railway Systems. Modelling, Analysis, Verification, and Certification. RSSRail 2016. Lecture Notes in Computer Science(), vol 9707. Springer, Cham.